From Keyword Research to Intent Mapping: The Structural Shift in AI Search

The dashboard was green. Every metric pointed up and to the right. Keyword rankings climbed from position seven to position three. The third-party tools that charged monthly fees reported exactly what they were paid to report: success. The client's marketing team celebrated the progress in their monthly review.

Then someone asked about the pipeline.

Traffic was down 60%. Lead generation had fallen off a cliff. The content that ranked beautifully was generating a fraction of the clicks it had six months earlier. The SEO strategy was working perfectly, according to every traditional metric, while the business outcomes it was supposed to drive were collapsing.

This is not an edge case. This is not a penalty or a competitor surge or a technical error. This is what structural obsolescence looks like.

Between 2024 and 2026, the entire infrastructure of keyword research lost its predictive power. The tools became unreliable. The metrics decoupled from business outcomes. The tactics optimized for a search interface that no longer exists.

This is the documentation of what happened and what emerged to replace it.

Part 1: Why Keyword Research Tools Became Unreliable After AI Overviews

In December 2024, Promodo published a study that quantified what SEO practitioners had been noticing for months: the keyword research tools everyone relied on had gone blurry.

The study analyzed 184 websites across different regions, niches, and traffic volumes. The baseline was Google Search Console, which reports actual traffic data. The comparison set was SEMrush, Ahrefs, and Similarweb, the tools agencies use to make content investment decisions, prioritize keyword targets, and justify client budgets.

The error rates:

- SEMrush: 61.58% average error

- Ahrefs: 48.63% average error

- Similarweb: 56.95% average error

Strategic decisions about which content to create, which markets to enter, and where to allocate six-figure budgets were being made based on estimates that no longer tracked reality.

The anomalies revealed the scale of the misalignment. SEMrush estimated 130,000 monthly visits for a site that was actually getting 50,000. Ahrefs underestimated traffic for sites where SERP positions changed faster than their crawler could update. Similarweb's accuracy dropped so severely for low-traffic sites that they pushed a major system overhaul in July 2024, an implicit admission that the problems were significant enough to require foundational changes.

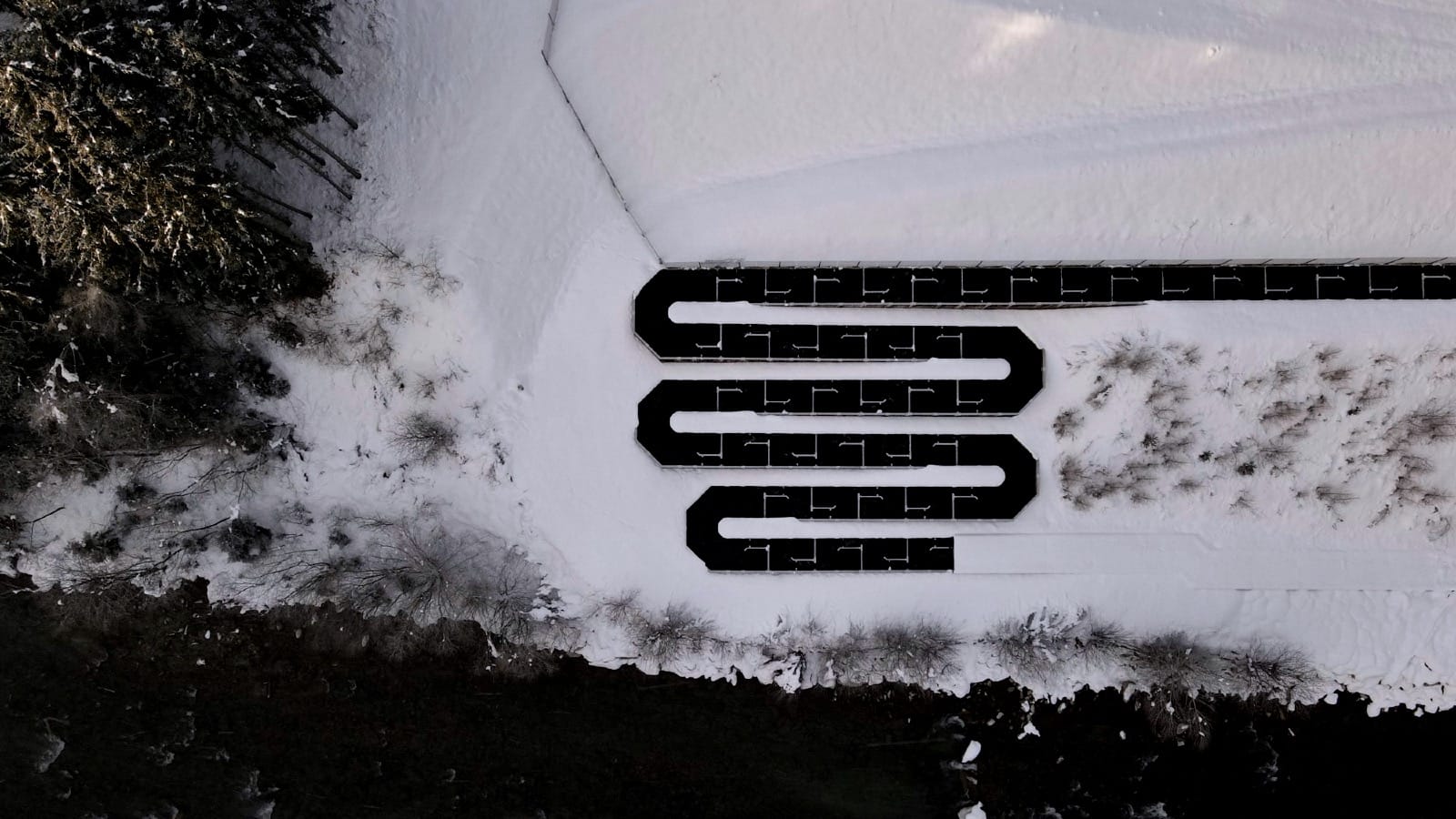

The tools weren't broken in the traditional sense. They were tuned for an interface that had changed. All three used variations of the same formula:

Traffic = Keyword Rankings × Search Volume × CTR

This formula assumes:

- SERP layouts are stable

- CTR curves by position are predictable

- Clicks actually happen

- Rankings correlate with visibility

None of these are true anymore.
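To make the formula concrete, here is a minimal Python sketch. The function names, the sample keyword rows, the CTR-by-position curve, and the 0.35 suppression multiplier are all illustrative assumptions, not values taken from any of the tools. The final line reuses the 130,000 versus 50,000 anomaly cited above to show how a percentage error of this kind can be computed.

```python
from dataclasses import dataclass


@dataclass
class KeywordRow:
    keyword: str
    position: int          # current ranking position
    monthly_searches: int  # estimated search volume


# Hypothetical CTR-by-position curve (assumed values, for illustration only).
ASSUMED_CTR_BY_POSITION = {1: 0.28, 2: 0.15, 3: 0.10}


def estimate_traffic(rows, ctr_by_position):
    """Sum of search volume x CTR at the ranked position, per keyword."""
    return sum(
        row.monthly_searches * ctr_by_position.get(row.position, 0.0)
        for row in rows
    )


def percent_error(estimated, actual):
    """Absolute error of an estimate relative to measured traffic, in percent."""
    return abs(estimated - actual) / actual * 100


rows = [
    KeywordRow("best crm software", 1, 10_000),
    KeywordRow("crm for agencies", 3, 4_000),
]

# Estimate under the classic "stable CTR curve" assumption.
classic = estimate_traffic(rows, ASSUMED_CTR_BY_POSITION)

# Same rankings, but every CTR scaled down to mimic clicks absorbed by an
# AI-generated answer. The 0.35 multiplier is an assumption.
suppressed_curve = {pos: ctr * 0.35 for pos, ctr in ASSUMED_CTR_BY_POSITION.items()}
suppressed = estimate_traffic(rows, suppressed_curve)

print(round(classic), round(suppressed))
print(round(percent_error(130_000, 50_000)))
```

The rankings are identical in both runs; only the CTR assumption changes. That is the failure mode described here: a model can have the positions right and still miss the traffic figure by a wide margin.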

The tools were built for the era of ten blue links. While they've attempted to account for AI Overviews, Featured Snippets, People Also Ask boxes, and Local Packs, the underlying challenge remains: their models were designed to predict clicks, but zero-click searches now account for 60-70% of all Google queries.

By late 2025, practitioners had shifted how they used the tools. The data became "directionally useful"—a signal for relative comparison rather than absolute forecasting.

Part 2: Why Rankings No Longer Predict Traffic in AI Search

On November 4, 2025, Seer Interactive published the results of a 15-month study tracking 3,119 search terms across 42 client organizations. The findings documented the complete decoupling of rankings from traffic.

Organic CTR for queries with AI Overviews:

- June 2024: 1.76%

- July 2025: 0.57% (the crash)

- September 2025: 0.61%

- Decline: 61%

Paid CTR for queries with AI Overviews:

- June 2024: 19.70%

- July 2025: 3.26%

- Decline: 68%

You could rank number one and get less than 1% click-through if an AI Overview appeared. You could maintain your position while losing two-thirds of your traffic. The correlation between ranking and visibility had been severed.

The business impact was immediate and brutal.

HubSpot: Between 2023 and 2025, organic traffic dropped 80% for some of their highest-traffic pages. Rankings held. Domain authority stayed strong. Revenue still grew 22% year-over-year in Q4 2024, not because SEO was working, but because brand equity and direct traffic sheltered them from complete collapse.

Mid-sized skincare brand: Ranked position two on Google for "best hyaluronic acid serums." Traffic went from 18,000 monthly visits to 5,600, a 69% decline. No penalty. No competitor surge. No technical errors. Just invisibility. Google's AI Overview started answering the question directly, and clicks became optional.

SaaS company: Traffic dropped 40% after Google rolled out SGE. The agency panicked and doubled down on traditional SEO tactics: more content, more links, more keyword optimization. Then they ran an AI visibility audit and discovered their documentation was being cited constantly in Gemini and ChatGPT Enterprise. Procurement teams were getting answers without clicking through. Traffic was down, but conversions were up. They were still winning; the old metrics just couldn't see it.

G2.com: Lost approximately 80% of SEO traffic between 2023 and 2026. Its business model relied heavily on organic comparison traffic, so when the retrieval layer shifted, the entire model fractured.

The pattern was consistent: green dashboards, red pipelines. Traditional metrics showed success while business outcomes disappeared. Rankings held while traffic evaporated, and the tools that were supposed to measure performance had no framework for understanding what was happening.

Part 3: How AI Search Queries Differ from Traditional Keyword Queries

Traditional search queries average 3-4 words. "Best CRM software." "SEO tools." "Project management app."

AI search queries average 23 words, according to data from Onely's December 2025 analysis of conversational search patterns.

Users are no longer typing keyword strings. They're asking full questions with context, constraints, and embedded intent:

"I'm a 12-person creative agency in Austin managing client work in Asana but our project timelines keep slipping because account managers can't see designer capacity in real time. What tools integrate with Asana for resource planning that don't require a dedicated project manager to maintain?"

You cannot keyword-research this query. You cannot identify a "target keyword" with commercial intent and build a page around it. The entire methodology breaks down.

More than 70% of AI search queries don't fit into the traditional intent categories of informational, transactional, or navigational. They're multi-layered, task-oriented, and conversational. They assume the AI system understands context, can synthesize information across sources, and will provide a direct answer rather than a list of links.

Voice search accelerated the shift. By 2026, voice queries were approaching 8 words on average, double the traditional search length. Mobile users were typing longer queries because autocomplete and conversational interfaces made it easier to provide full context than to guess the right 2-3 word combination.

The keyword research infrastructure was built for an interface where people optimized their queries for search engines. That interface has evolved. Search engines now optimize for natural language, and the tools built to analyze keyword strings have no framework for understanding paragraphs.

The collapse of keyword research didn't just affect organic search. It destabilized paid targeting models built on the same assumption: that intent could be reduced to a string. As queries expanded into paragraphs and AI systems began interpreting context rather than matching phrases, contextual advertising quietly regained strategic importance. Not because it's new, but because it aligns with how machines now interpret meaning. When intent stopped behaving like a keyword, targeting models built on keyword logic fractured too. Context, not phrase matching, became the durable signal.

Part 4: What Replaced Keyword Research in AI Search Strategy

Practitioners shifted away from traditional keyword-volume targeting toward semantic and intent-based methodologies.

They're not calling it "Intent Mapping." They're calling it:

- GEO (Generative Engine Optimization)

- AEO (Answer Engine Optimization)

- Topic clusters

- Entity-based SEO (AI SEO)

- Passage-level optimization

- Semantic clustering

But the underlying shift is identical: from optimizing for rankings to optimizing for retrieval and citation.

The new metrics:

- AI citation rate (how often your content is cited in AI-generated answers)

- Brand mention share of voice (percentage of AI responses that reference your brand)

- Visibility score in AI answers (not SERP position)

- Sentiment in AI responses (how you're described when cited)

- Conversion rate by traffic source (AI-driven vs. traditional organic)

- AI-attributed leads (pipeline sourced from conversational search)
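As a rough illustration of the first two metrics in this list, the sketch below computes them from a small sample of collected AI answers. The record layout, the domains, and the brand names are hypothetical; a real pipeline would depend on how a given visibility tracker exports its data.

```python
# Hypothetical sample: each record is one AI answer collected for a tracked prompt.
# "cited_domains" lists the sources the answer linked to; "brands" lists the
# brands it named in its text.
responses = [
    {"prompt": "best crm for agencies",
     "cited_domains": ["example.com", "rival.io"], "brands": ["Example", "Rival"]},
    {"prompt": "crm with asana integration",
     "cited_domains": ["rival.io"], "brands": ["Rival"]},
    {"prompt": "crm total cost of ownership",
     "cited_domains": ["example.com"], "brands": ["Example"]},
    {"prompt": "crm for 12 person team",
     "cited_domains": [], "brands": ["Rival", "Other"]},
]


def citation_rate(responses, domain):
    """Share of sampled answers that cite the given domain at least once."""
    if not responses:
        return 0.0
    cited = sum(1 for r in responses if domain in r["cited_domains"])
    return cited / len(responses)


def brand_mention_share(responses, brand):
    """Share of sampled answers that mention the given brand at least once."""
    if not responses:
        return 0.0
    mentioned = sum(1 for r in responses if brand in r["brands"])
    return mentioned / len(responses)


print(f"citation rate (example.com): {citation_rate(responses, 'example.com'):.0%}")
print(f"mention share (Example):     {brand_mention_share(responses, 'Example'):.0%}")
print(f"mention share (Rival):       {brand_mention_share(responses, 'Rival'):.0%}")
```

Both functions divide by the number of sampled answers, so the results are only as representative as the prompt set behind them.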

The new tools:

- AI visibility trackers (Profound, ZipTie)

- Semantic clustering platforms

- Prompt testing tools

- Citation monitoring systems

- AI bot analytics (tracking Claude, GPT, Gemini crawlers)
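For the last item, one simple approach is to count crawler hits in server access logs by user-agent substring. The sketch below assumes a combined-log-style format and uses simplified, made-up log lines. The token list is also an assumption that should be checked against each vendor's current crawler documentation before relying on it.

```python
from collections import Counter

# User-agent substrings to look for. Treat this list as an assumption to
# verify against current vendor documentation.
AI_BOT_TOKENS = ("GPTBot", "ClaudeBot", "PerplexityBot")


def count_ai_bot_hits(log_lines):
    """Count log lines per AI crawler token; lines from other clients are ignored."""
    counts = Counter()
    for line in log_lines:
        for token in AI_BOT_TOKENS:
            if token in line:
                counts[token] += 1
                break  # attribute each line to at most one crawler
    return counts


# Simplified, hypothetical access-log lines (documentation IP ranges).
sample_log = [
    '203.0.113.5 - - [10/Jan/2026:10:00:00 +0000] "GET /docs/pricing HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
    '203.0.113.9 - - [10/Jan/2026:10:00:04 +0000] "GET /docs/api HTTP/1.1" 200 2048 "-" "Mozilla/5.0 (compatible; ClaudeBot/1.0)"',
    '198.51.100.7 - - [10/Jan/2026:10:00:09 +0000] "GET / HTTP/1.1" 200 1024 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
    '203.0.113.5 - - [10/Jan/2026:10:00:12 +0000] "GET /docs/setup HTTP/1.1" 200 4096 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
]

hits = count_ai_bot_hits(sample_log)
for bot, count in hits.most_common():
    print(bot, count)
```

User-agent strings can be spoofed, so counts like these are a directional signal, not a verified audit.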

The new roles:

- GEO Specialist

- Answer Engine Optimizer

- AI Search Strategist

What emerged doesn't look like traditional SEO. It looks like structured data architecture, knowledge graph development, and content designed for extraction rather than click-through.

Part 5: Semantic Clustering, Entity Mapping, and Intent Networks

The practitioners who figured this out early didn't start with new tools. They started with new assumptions.

1. Semantic Clustering

Traditional keyword research creates separate pages for keyword variations. "Cloud security software." "Cloud security compliance tools." "Cloud security solutions for enterprises." Three keywords, three pages, three chances to rank.

Semantic clustering treats these as a single concept. Instead of fragmenting content across multiple thin pages optimized for individual keywords, you create one authoritative piece that covers the entire topic comprehensively. This signals expertise to AI systems, which prioritize depth and coherence over keyword matching.

AI systems don't match keywords; they match meaning. A page optimized for "best project management software" will surface for queries about "tools for managing client work," "collaborative task tracking platforms," or "how to organize team projects" if the semantic relationship is clear. The keyword is irrelevant. The concept is what matters.
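The grouping step can be sketched in a few lines of Python. To keep the example dependency-free, it measures similarity by word overlap (Jaccard similarity), which is a lexical stand-in and not how AI systems compare meaning. A production workflow would more likely compare embedding vectors, which is what lets differently worded queries land in the same cluster. The stop-word list and the 0.3 threshold are assumptions.

```python
def tokens(phrase):
    """Lowercased word set, minus a few filler words (assumed stop list)."""
    stop = {"for", "the", "a", "of", "and", "to"}
    return {word for word in phrase.lower().split() if word not in stop}


def jaccard(a, b):
    """Size of the intersection of two token sets divided by the size of their union."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def cluster_phrases(phrases, threshold=0.3):
    """Greedy single pass: a phrase joins the first cluster whose seed is similar enough."""
    clusters = []  # each cluster is a list of phrases; the first entry is the seed
    for phrase in phrases:
        for cluster in clusters:
            if jaccard(tokens(phrase), tokens(cluster[0])) >= threshold:
                cluster.append(phrase)
                break
        else:
            # No existing cluster was close enough, so start a new one.
            clusters.append([phrase])
    return clusters


phrases = [
    "cloud security software",
    "cloud security compliance tools",
    "cloud security solutions for enterprises",
    "project management app",
]

clusters = cluster_phrases(phrases)
for cluster in clusters:
    print(cluster)
```

With these inputs, the three cloud security variations collapse into one cluster, which is the signal to plan one comprehensive page instead of three thin ones.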

2. Entity Relationship Mapping

At the core of semantic search are entities: unique, well-defined people, places, objects, and concepts that form the building blocks of knowledge. Entity relationship mapping is the process of explicitly defining these entities and the connections between them.

A real estate agency doesn't just have "listings." They have properties (entities) with relationships to locations (entities), agents (entities), neighborhoods (entities), and schools (entities). These relationships are defined in a subject-predicate-object format: "Property A [subject] is located in [predicate] Neighborhood B [object]."

Schema markup makes these relationships machine-readable. AI systems use this structure to understand context, validate claims, and determine what content is authoritative for a given entity. Without explicit entity definitions, your content is semantically ambiguous, retrievable only if it happens to match lexically.
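As one possible illustration of the real estate example, the Python sketch below builds a small JSON-LD document using the schema.org `House`, `Place`, and `City` types with the `containedInPlace` property, then flattens it back into subject-predicate-object triples. The entity names and the helper functions are hypothetical, and a real listing would carry many more properties.

```python
import json


def property_jsonld(name, neighborhood, city):
    """Build a nested JSON-LD description of a property and where it sits."""
    return {
        "@context": "https://schema.org",
        "@type": "House",
        "name": name,
        "containedInPlace": {
            "@type": "Place",
            "name": neighborhood,
            "containedInPlace": {"@type": "City", "name": city},
        },
    }


def to_triples(node):
    """Flatten nested entities into (subject, predicate, object) name triples."""
    triples = []
    for key, value in node.items():
        if isinstance(value, dict):
            triples.append((node["name"], key, value["name"]))
            triples.extend(to_triples(value))
    return triples


# Hypothetical entity names, mirroring the placeholders in the example above.
doc = property_jsonld("Property A", "Neighborhood B", "City C")

print(json.dumps(doc, indent=2))
for subject, predicate, obj in to_triples(doc):
    print(f"{subject} [{predicate}] {obj}")
```

The JSON output is what would be embedded in a page inside a `script` tag of type `application/ld+json`. The triples view is just a convenient way to check that the relationships say what you intended.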

3. Intent Networks

B2B purchasing decisions involve 6-15 stakeholders across an 18-month buying cycle. The champion researches solutions. The technical evaluator investigates integration requirements. The CFO analyzes ROI. The procurement team verifies compliance. The end users assess usability.

Each stakeholder has different questions at different stages. Traditional keyword research optimizes for the initial exploratory query ("best CRM for small business") and ignores the 47 questions that follow.

Intent networks map the entire journey. They connect disparate queries across time and stakeholders, creating a content strategy that addresses the full spectrum of the buying committee's needs. This is not keyword targeting. This is modeling how decisions are actually made and ensuring your content is retrievable at every inflection point.

AI systems synthesize information across these networks. When a CFO asks ChatGPT, "What's the total cost of ownership for Salesforce versus HubSpot including implementation, training, and three-year licensing?" the AI pulls from pricing pages, case studies, implementation guides, and user reviews, not from a single keyword-optimized landing page.

If your content exists only at the top of the funnel, you're invisible to 90% of the decision-making process.
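An intent network can be modeled as a simple mapping from stakeholder and buying stage to the questions asked at that point, then checked against a content inventory to find gaps. The sketch below is a minimal Python version. The stage names, the questions, and the URL paths are all hypothetical, and exact string matching stands in for whatever matching a real audit would use.

```python
from collections import defaultdict

# Hypothetical intent network: (stakeholder, stage) -> questions asked there.
intent_network = {
    ("champion", "explore"): ["best CRM for small business"],
    ("technical evaluator", "evaluate"): ["Does it integrate with our existing stack?"],
    ("CFO", "evaluate"): ["What is the three-year total cost of ownership?"],
    ("procurement", "decide"): ["Which compliance certifications does the vendor hold?"],
    ("end user", "decide"): ["How long does onboarding take?"],
}

# Hypothetical content inventory: question -> page that answers it.
content_inventory = {
    "best CRM for small business": "/blog/best-crm-small-business",
    "What is the three-year total cost of ownership?": "/pricing/tco",
}


def coverage_gaps(network, inventory):
    """Group unanswered questions by stakeholder, keeping the stage for context."""
    gaps = defaultdict(list)
    for (stakeholder, stage), questions in network.items():
        for question in questions:
            if question not in inventory:
                gaps[stakeholder].append((stage, question))
    return dict(gaps)


def coverage_ratio(network, inventory):
    """Fraction of all mapped questions that have an answering page."""
    questions = [q for qs in network.values() for q in qs]
    if not questions:
        return 0.0
    return sum(q in inventory for q in questions) / len(questions)


gaps = coverage_gaps(intent_network, content_inventory)
print(f"coverage: {coverage_ratio(intent_network, content_inventory):.0%}")
for stakeholder, missing in gaps.items():
    print(stakeholder, missing)
```

In this toy inventory only the champion and the CFO are served, so the gaps sit with the technical evaluator, procurement, and end users. This is the kind of blind spot that top-of-funnel keyword targeting tends to leave.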

Why Keyword-Based Forecasting Fails in AI-Mediated Search

Traditional keyword research assumed stable SERPs, predictable CTR curves, clicks that actually happened, and rankings that correlated with visibility.

These assumptions have become less reliable as interfaces continue to evolve. The tools have updated their models, but error rates remain significant as new AI features, ad formats, and answer types continue to emerge. The metrics often disconnect from business outcomes. The tactics were optimized for an interface that continues to change.

Some companies that shifted early toward semantic and retrieval-based optimization are seeing less traffic but higher conversions. Others that rely exclusively on traditional SEO metrics are finding those dashboards less predictive of pipeline.

What replaced keyword research isn't a single methodology or a branded framework. It's a fundamental reorientation away from rankings and toward retrieval. Away from keywords and toward concepts. Away from individual pages and toward semantic networks.

The practitioners who made the shift aren't calling it the same thing. But they're all solving the same problem: how to be discoverable when AI systems mediate access to information, clicks are optional, and rankings are decorative.

Sources & Research

- Promodo (2024) – Comparative analysis of SEMrush, Ahrefs, and Similarweb traffic estimation accuracy vs. Google Search Console baseline across 184 websites

- Seer Interactive (2025) – 15-month CTR impact study tracking AI Overviews across 3,119 search terms and 25.1M impressions

- Ahrefs (2026) – AI Overview click reduction longitudinal study showing acceleration from 34.5% to 58% impact

- Onely (2025) – Conversational search query length analysis documenting shift from 3-4 word to 23-word average queries

- SEOmator (2026) – Voice search query length trends and mobile search behavior analysis

- LinkNow Media (2025) – Query complexity evolution and 7-8 word average trend documentation

- SparkToro/Similarweb (2024) – Zero-click search rate measurement and longitudinal tracking

- Flux8Labs (2026) – Business impact case studies including SaaS conversions, skincare brand traffic loss, and AI visibility audits

- GetPassionFruit (2025) – HubSpot and Forbes organic traffic decline case studies

- SearchEngineLand (2024) – Topic cluster methodology and semantic SEO framework documentation

- TryProfound (2025) – GEO (Generative Engine Optimization) framework and AI visibility tracking methodology
