How to Get Your Business Found by AI: The 2026 Guide to Staying Visible in ChatGPT, Perplexity, and Beyond

A software buyer sat in a quiet office with a legal pad half full of notes. They asked ChatGPT to help sort out project management tools. The model listed five. On another tab, Google pulled up a set of names that didn’t match. The vendor who had spent years climbing the rankings didn’t appear in the conversation at all.

It keeps happening. Different industries. Different buyers. The same absence in the same place.

Early on, the systems behaved like librarians. They pointed to what was already there. Lately they have been listening in. They hear the side conversations now.

A recent eMarketer survey of B2B buyers found that nearly half (47%) now use AI tools for market research and vendor discovery. Almost four in ten (38%) use them to vet and shortlist vendors. And more than one in five (22%) use AI more than traditional search when they are researching purchases. The traditional funnel is collapsing. Discovery. Evaluation. Shortlisting. A single conversation now. If you are not in that conversation, you vanish before you know you were in the running.

47% of B2B buyers use AI tools for market research and vendor discovery

38% use AI for vetting and shortlisting vendors

22% now use AI more than traditional search when researching purchases

The traditional funnel is collapsing. Discovery, evaluation, and vendor comparison that used to happen over weeks across multiple touchpoints now happens in a single chatbot conversation.

If you're not in that conversation, you're eliminated before you know you were being considered.

Source: eMarketer, October 2025

Why AI Models Ignore What Used to Work

For years, the SEO playbook was relatively stable. Publish good content. Build backlinks. Keep your site technically clean. Monitor your rankings. The work was iterative but predictable.

That model assumed your website was the center of your digital footprint. If people found your site, they found you. But AI systems do not work that way.

Research from Semrush analyzing millions of AI citations found that nearly ninety percent of the content these models reference was published in the last two years. Half of it came from articles updated in the last eleven months. A page from two years ago sits untouched while a thin update from last winter rises to the top of an answer. The models reach for whatever feels new, even when the older work still stands.

Your comprehensive guide from 2023 loses to a competitor’s thinner update from 2025. Even if yours is better researched. Even if it has more backlinks. The date matters more than you would think.

And it does not stop there. Different AI tools behave completely differently. ChatGPT cites about three sources per answer and leans heavily on Wikipedia and Reddit. Perplexity cites twice as many sources and loves niche industry directories and customer reviews. Gemini pulls from your own website more than the others do, but it also indexes YouTube and LinkedIn more aggressively.

There is no unified algorithm to optimize for anymore. You are not trying to rank in one place. You are trying to be present and credible across a dozen different retrieval systems, each with its own trust model.

Executives are asking for this now. A Search Engine Land survey of SEO professionals found that ninety-one percent said their executives are demanding AI search visibility. But sixty-two percent said it is driving less than five percent of revenue. The gap exists because most organizations are applying tactics without systems. They are treating this like SEO circa 2010. A checklist of one-time fixes. It does not hold up anymore.

Research tracking millions of AI citations found that 89% of referenced content was published in the last two years. Half came from articles updated in the last eleven months.

A comprehensive guide from 2023 loses ground to a thinner competitor update from 2025—even when the older work is better researched, better linked, and more authoritative.

The models learned to favor recency during training. They kept the habit.

Source: Semrush, 2025

What Actually Shows Up in AI Answers

After watching how this plays out across industries, a pattern shows up. The organizations appearing in AI citations are not doing one clever thing. They are running four interconnected systems.

Why AI Models Prefer Fresh Content

Most businesses are still starting with keywords. But the AI models do not care about keywords. They care about questions. Real questions that people ask at different stages of figuring out whether they need you.

You can see the shape of the questions shift as people move from problem to vendor. The material has to meet them at each point. No surprise in that. What has changed is what happens after.

Old pages go stale faster than they used to. You can see it in how the models behave. Anything untouched for a few months starts slipping from view. Some pages need monthly attention. Others last a little longer. A few can sit until the season changes. The pattern becomes obvious once you watch what the models forget first.

Triggers start to matter too, the events that call for a refresh outside the regular cycle. A competitor begins getting cited and you do not. An algorithm change hits your category. A major industry event shifts the conversation. And the refresh has to be real. A changed date does not fool anything. The models read the material the way people do. They notice when the substance has moved.

A content calendar stops being just a publication schedule. It becomes a maintenance schedule. More time ends up going to renewing what exists instead of adding something new. Teams watch which pieces drive pipeline and give those priority. The work becomes cyclical instead of linear.
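A maintenance schedule like this can be kept as a small script rather than a spreadsheet. What follows is a minimal sketch, not a prescribed tool. The cadence lengths, the `Page` fields, and the `pages_due` helper are all assumptions made for illustration, and the date that counts is the last substantive change, not the last time the timestamp was touched.

```python
from dataclasses import dataclass
from datetime import date

# Assumed cadences in days. The right numbers depend on what you observe
# the models forgetting first in your own category.
CADENCE_DAYS = {"monthly": 30, "bimonthly": 60, "seasonal": 90}


@dataclass
class Page:
    url: str
    last_substantive_update: date  # when the content itself last changed
    cadence: str                   # key into CADENCE_DAYS


def pages_due(pages, today):
    """Return pages past their refresh cadence, most overdue first."""
    overdue = []
    for page in pages:
        age_days = (today - page.last_substantive_update).days
        days_over = age_days - CADENCE_DAYS[page.cadence]
        if days_over > 0:
            overdue.append((days_over, page))
    overdue.sort(key=lambda item: item[0], reverse=True)
    return [page for _, page in overdue]


# Hypothetical inventory
inventory = [
    Page("/guides/resilience", date(2025, 1, 1), "monthly"),
    Page("/blog/recent-post", date(2025, 5, 20), "monthly"),
    Page("/services/overview", date(2025, 1, 1), "seasonal"),
]
due = pages_due(inventory, today=date(2025, 6, 1))
```

Sorting by how far past due each page is keeps the list honest. The pages that drive pipeline can then be pulled to the top by hand.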

How Social Posts and Community Threads Become Training Data

Analysis from Profound of what ChatGPT cites most often found Wikipedia at the top. Nearly eight percent of all citations. Reddit came in second. Forbes and G2 tied for third. This says something about what the model considers authoritative.

Wikipedia sits outside your control. But you can contribute to relevant articles in your industry if your expertise is legitimate. You can be cited by sources that Wikipedia itself cites. You can make sure your company has a Wikipedia page if you meet their notability standards.

Then there is the social layer. This is the part that slips past most people.

A stray LinkedIn post ends up in the corpus now. Same with a Reddit thread you answered at midnight. A Quora response. A YouTube video transcript. The models absorb it the same way they do anything else.

Perplexity cites Reddit more than any other platform. Six point six percent of its citations come from there. That is not a distribution play. That is a corpus play. What people say about you in community discussions directly shapes how AI models read your authority.

It gives social posts a different weight. Not engagement. The ability to be cited.

Brand mentions across these platforms start to matter in new ways. Not just to engage. To correct misinformation. To take part in discussions where your product or approach is mentioned. Those discussions are training data.

The technical layer sits underneath. Schema markup that tells AI systems who you are and what you do. Consistent NAP (name, address, phone number) across every property where you are listed. Clear service pages that map to how people actually search. Author profiles for your experts with credentials and track records visible.

An entity graph gets built this way. Not just a website.
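For the schema piece, the usual vehicle is a JSON-LD block using schema.org vocabulary. Here is a minimal sketch that builds one in Python. The company details are placeholders, the `organization_jsonld` helper is hypothetical, and the choice of properties (`knowsAbout` for services, `sameAs` for other profiles) is one reasonable reading, not a guarantee of how any AI system will interpret it.

```python
import json


def organization_jsonld(name, url, telephone, street, city, region,
                        postal_code, topics, profiles):
    """Build a schema.org Organization description as a plain dict."""
    return {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "telephone": telephone,
        "address": {
            "@type": "PostalAddress",
            "streetAddress": street,
            "addressLocality": city,
            "addressRegion": region,
            "postalCode": postal_code,
        },
        "knowsAbout": topics,   # areas of expertise
        "sameAs": profiles,     # the same entity on other platforms
    }


# Placeholder values for illustration only
data = organization_jsonld(
    name="Example Supply Chain Advisors",
    url="https://www.example.com",
    telephone="+1-555-0100",
    street="100 Example Street",
    city="Columbus",
    region="OH",
    postal_code="43004",
    topics=["Inventory management", "Supplier risk", "Logistics disruption"],
    profiles=["https://www.linkedin.com/company/example"],
)

# The output goes inside <script type="application/ld+json"> on the site.
snippet = json.dumps(data, indent=2)
```

Generating the block from one source of truth is what keeps the name, address, and phone number identical everywhere they are published.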

How ChatGPT, Perplexity, Gemini, and Claude Pick Their Sources

Yext analyzed thousands of citations to see what each AI platform trusts. Three distinct patterns showed up.

ChatGPT trusts what the internet agrees on. Nearly half its citations come from third-party consensus sources. Yelp. TripAdvisor. Review platforms where lots of people have weighed in. Companies that appear in ChatGPT often have strong third-party validation behind them.

Perplexity trusts industry experts and customer reviews. It leans on niche directories. Industry-specific platforms where practitioners gather. The companies that show up tend to have a footprint in those specialized corners of the web.

Gemini trusts what your brand says. More than half its citations come from brand-owned websites. It wants clean structure on your domain. Schema markup. Clear service pages. Well-organized content. The sites that show up there usually have their own house in order first.

You cannot win all three with one approach. ChatGPT looks for outside validation. Perplexity looks for embedded expertise in communities. Gemini looks at whether your own house is in order.

Over time the work spreads outward. A site structured properly. Presence in industry directories and review platforms. Real participation in community discussions. Third-party citations in news and industry publications. A site is only one part of it.

Quarterly audits start to look different. Teams check where they are listed and whether that information is current. They pay attention to which platforms each AI model pulls from for key queries. They build distribution checklists for major content pieces. A blog post by itself does not carry far. The same material ends up as LinkedIn threads and Reddit conversations and podcast appearances.

The pattern becomes clear after a while. Material that used to live in one place now needs to exist in many.

Analysis of thousands of AI citations revealed three distinct trust patterns:

ChatGPT (75% market share): trusts what the internet agrees on. 48.7% of citations come from third-party consensus sources (Yelp, TripAdvisor, review platforms).

Perplexity (6.6% market share): trusts industry experts and customer reviews. Heavily favors niche, industry-specific directories and community discussions.

Gemini (13% market share): trusts what your brand says. 52.1% of citations come from brand-owned websites.

You can't win all three with one strategy. None of them follow the same trail.

Source: Yext, October 2025

What Each Platform Actually Does

ChatGPT added search in late 2024. The rollout came with a quiet promise to surface high-quality reporting. You can see it in how it leans on Wikipedia and the big newswires. Market share sits around seventy-five percent when you include Microsoft Copilot integration. Eight hundred fifty-six million users as of late 2025.

Perplexity built its entire platform around real-time search and transparent citation. It presents itself as a research tool built around explicit sourcing. The company launched a Search API covering hundreds of billions of web pages. Every answer includes numbered citations. Market share is smaller but growing. Six point six percent as of late 2025.

Claude introduced a Citations API in mid-2025 and added real-time web search shortly after. The focus is on verifiable, trustworthy outputs. Its most-cited sources include news outlets, academic publications, and financial sites. Market share around three point six percent.

Gemini has direct integration with Google Search through what it calls grounding. When the search tool is enabled, it handles the entire workflow. It returns detailed metadata about queries, results, and citations. Market share sits around thirteen percent.

The variation becomes obvious the longer you trace where each system finds its material. None of them follow the same trail.

How to Tell Whether AI Is Showing You at All

AI visibility is harder to measure than traditional SEO. There are no keyword rankings to track. No position one to aim for. The citations shift depending on context and timing.

There is no clean dashboard for this yet. People still open each chatbot in a separate tab and ask the same ten questions each month. They jot down who appears. They notice when a name vanishes.
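The jotting-down can at least be made consistent. As a rough sketch, assuming each manual check is logged as a platform, a query, and the names that appeared, a few lines of Python turn the notes into a per-platform citation rate and a list of names that dropped out. The log format and both helper functions are hypothetical.

```python
from collections import defaultdict


def citation_rates(observations, brand):
    """Share of logged queries, per platform, in which `brand` was named."""
    asked = defaultdict(int)
    cited = defaultdict(int)
    for platform, _query, names in observations:
        asked[platform] += 1
        if brand.lower() in {name.lower() for name in names}:
            cited[platform] += 1
    return {platform: cited[platform] / asked[platform] for platform in asked}


def vanished(last_month, this_month):
    """Names that appeared last month but not this month."""
    return sorted(set(last_month) - set(this_month))


# Hypothetical log: (platform, query, names that appeared in the answer)
log = [
    ("ChatGPT", "best supply chain consultants", ["Acme Advisory", "Example Co"]),
    ("ChatGPT", "supplier risk consulting firms", ["Example Co"]),
    ("Perplexity", "best supply chain consultants", ["Acme Advisory"]),
]
rates = citation_rates(log, "Acme Advisory")
dropped = vanished(["Acme Advisory", "Example Co"], ["Example Co"])
```

With only ten or twenty queries a month the rates are noisy. The trend across months is more informative than any single number.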

They end up watching the same few signals. What shows up. How the brand sounds. Whether any of it reaches the business.

First is visibility. Are you getting cited when people ask about your category? Track ten to twenty key queries per month across ChatGPT, Perplexity, Gemini, and Claude. Names get written down. Tools are emerging for this, but manual checking still catches what the tools miss.

Second is brand. Branded search volume. Sentiment in AI responses. Correlation with brand recall studies or share-of-voice metrics in category-defining queries. Leading indicators. They do not prove causation but they show correlation.

Third is business impact. Referral traffic from AI platforms gets tracked, but it is undercounted. Many citations do not include links. So qualified lead volume from organic channels matters too. Pipeline changes even when Google traffic stays flat. Leads mention finding a company through ChatGPT or seeing it in a Perplexity answer.
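For the referral piece, one simple approach is to bucket visits by referrer hostname. The sketch below assumes you can export full referrer URLs from your analytics. The hostname list is an assumption that will need maintaining, and, as noted above, it only sees the citations that carried a link.

```python
from urllib.parse import urlparse

# Assumed referrer hostnames. Platforms change domains; treat as a starting list.
AI_REFERRER_HOSTS = {
    "chatgpt.com": "ChatGPT",
    "chat.openai.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "gemini.google.com": "Gemini",
    "claude.ai": "Claude",
    "copilot.microsoft.com": "Copilot",
}


def classify_referrer(referrer_url):
    """Return the AI platform name for a full referrer URL, or None."""
    host = (urlparse(referrer_url).hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    return AI_REFERRER_HOSTS.get(host)


def count_ai_referrals(referrer_urls):
    """Tally visits per AI platform, ignoring everything else."""
    counts = {}
    for url in referrer_urls:
        platform = classify_referrer(url)
        if platform is not None:
            counts[platform] = counts.get(platform, 0) + 1
    return counts


# Hypothetical export
sample = [
    "https://chatgpt.com/",
    "https://www.perplexity.ai/search?q=supply+chain",
    "https://www.google.com/",
    "",
]
ai_counts = count_ai_referrals(sample)
```

Visits with an empty referrer fall through to None, which is why the qualified lead count and what leads say on calls still have to be read alongside this number.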

Adobe’s research on AI referral traffic found something telling. It is about one percent of total web traffic right now. Tiny. But visitors who arrive via AI citations convert at four times the rate of traditional search visitors. Lower bounce rates. Longer sessions. Higher revenue per visit in retail and travel.

When someone clicks through from an AI answer, they have already been filtered. The AI has synthesized information from multiple sources and placed you as relevant. The competition happens before anyone ever sees your site.

And that one percent is growing fast. Ten times growth in eight months according to the same Adobe data. Some analysts think AI referral traffic could surpass traditional search traffic by 2028. Maybe that is optimistic. The direction is clear either way.
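To put the tenfold figure in monthly terms, a quick back-of-the-envelope calculation helps. It assumes smooth compounding across the eight months, which the Adobe data does not claim, so treat the result as an average pace and not a forecast.

```python
import math

growth_factor = 10  # tenfold growth, from the Adobe figure cited above
months = 8

# Average monthly multiplier under steady compounding: 10 ** (1/8)
monthly_multiplier = growth_factor ** (1 / months)
monthly_rate_pct = (monthly_multiplier - 1) * 100

# Months needed to double at that pace
doubling_months = math.log(2) / math.log(monthly_multiplier)

print(f"about {monthly_rate_pct:.0f}% per month, "
      f"doubling roughly every {doubling_months:.1f} months")
```

That works out to roughly a third more traffic each month. Whether the pace holds is a separate question.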

AI referral traffic currently represents just 1% of total web traffic.

But visitors arriving via AI citations:

- Convert at 4x the rate of traditional search visitors

- Show 23% lower bounce rates (retail)

- Spend 41% longer on site (retail)

- Generate 80% more revenue per visit (travel)

That 1% grew 10x in eight months. Some analysts predict AI search traffic could surpass traditional search by 2028.

Source: Adobe Digital Insights, 2025

How a Real Company Became More Visible to AI

Take a boutique supply chain consultancy. Mid-market manufacturing clients. Strong reputation in its niche. It did the traditional SEO work. Ranked well for supply chain consulting and related terms. But when a VP of Operations asked ChatGPT for recommendations, it did not appear.

So they built the systems.

They created a comprehensive guide to supply chain resilience. Mapped it to specific pain points their clients actually asked about. Inventory management. Supplier risk. Logistics disruption. They put it on a quarterly refresh cycle. New data. Recent case studies. Emerging trends. The guide stayed current.

Senior consultants started publishing on LinkedIn. Not promotional posts. Technical insights. The kind of thing another practitioner would reference when explaining a concept. They showed up in supply chain subreddits offering genuinely helpful advice. They were featured in Supply Chain Dive and Logistics Management. Their consultants appeared on logistics podcasts.

They got listed in B2B directories. Clutch. UpCity. They made sure their company schema clearly defined their services, locations, and team expertise. They checked monthly whether AI tools cited them when asked about supply chain consulting for mid-market manufacturing.

Six months in, they started showing up. Not always. Enough that new clients mentioned it in passing. The leads that came through that channel were further along in their thinking by the time they reached out.

What’s Missing From Most AI Search Advice

Most guidance stops at the surface. Schema here. Citations there. Freshen the post. Wait a month. Try again. The machinery underneath rarely gets discussed.

The survey data tells the story. Ninety-one percent of SEOs reporting executives who demand visibility. Sixty-two percent seeing minimal revenue impact. Analysis of guidance from Moz, Ahrefs, Search Engine Journal, and Search Engine Land shows the pattern. Strong tactical recommendations. Weak operational frameworks.

Moz and Ahrefs have done solid work defining tactics. Search Engine Journal correctly points out that this requires changes in workflow. What is missing are the ways to put it into practice.

What is outlined here is meant to turn those ideas into work that actually runs. Not replacing existing tactical guidance. Adding the layer that lets those tactics keep going without collapsing.

How AI Went From Indexing to Listening

The systems used to index. Now they listen and confirm.

For decades, search engines catalogued what existed. They ranked pages based on links and keywords and metadata. Mechanical. Predictable.

These AI systems are doing something different. They pick up on what communities trust. What people vouch for without thinking much about it. What gets mentioned when real humans talk to each other. They are not just cataloguing anymore. They are trying to understand.

You start to see how scattered the evidence is. A review here and there. A forum thread half-buried. A line from a podcast you barely remember. It all keeps drifting, never really settling in one spot.

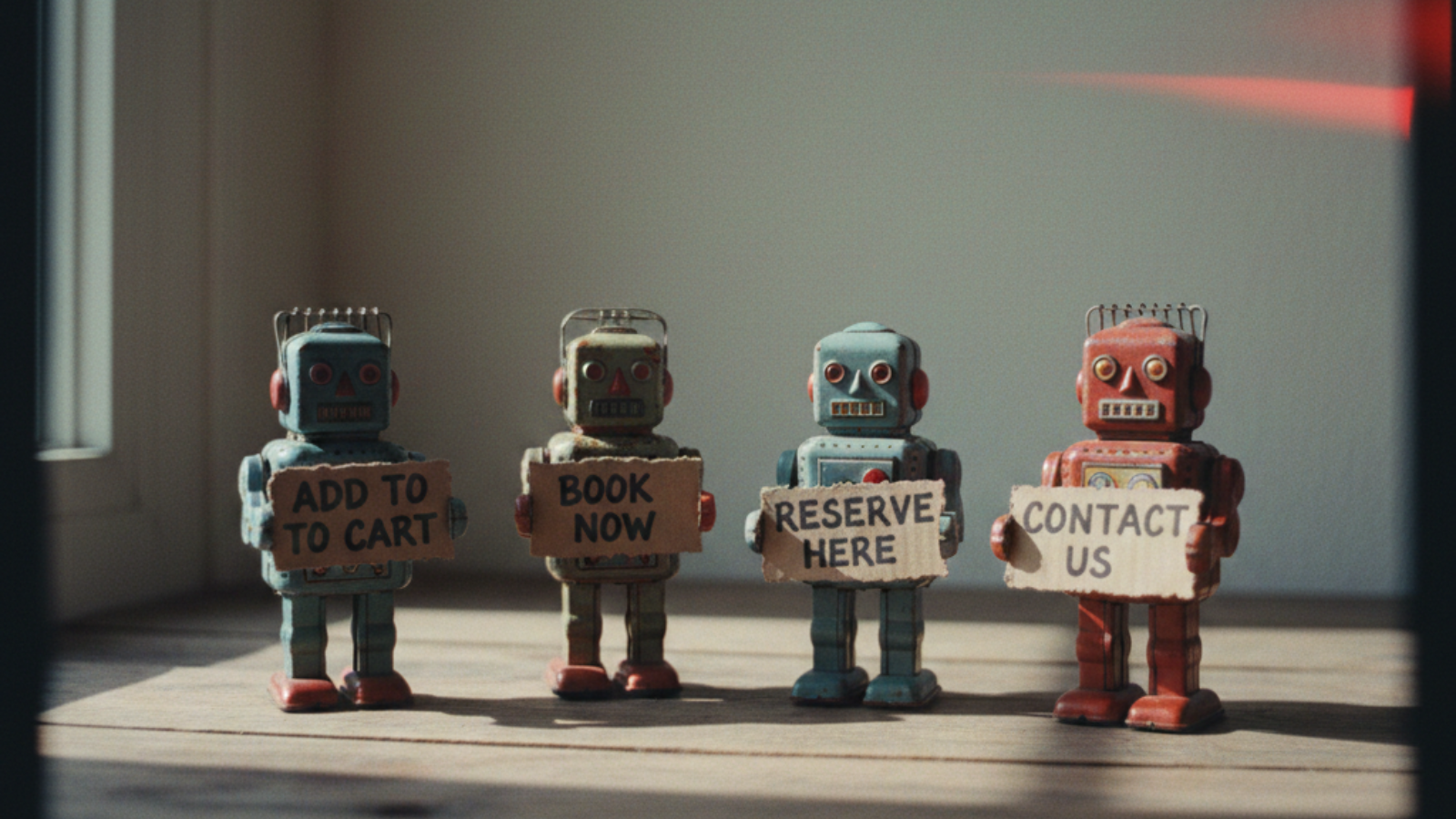

We help businesses build systematic visibility across the entire retrieval layer. Not just one platform, not just one tactic. The kind of infrastructure that holds up when the models shift again.

If that's what you're looking for, let's start a conversation.
